I remember a time when I was running datacenters and had absolute responsibility and accountability for critical business systems.

I also remember some cringe-worthy times when things would go down, and I would very quickly be introduced to the cost of downtime. Dollars and cents (not to mention reputation) forever lost because of what amounted to human error. Notice that I didn’t blame the technology.

We in the technology sector often spout off about all of our features and capabilities for redundancy, availability, security, automation, and the like. We are so proud of our terms and capabilities that we often neglect to look at what it really means for a business to build these capabilities into its operations and culture.

Since the Internet lives forever, I’ll put some context on the title of this blog: on August 8th, 2016, Delta Airlines had a major computer system failure that grounded flights and caused issues for many days afterwards. The financial impact of this outage goes well beyond the cost to Delta and its ability to serve customers; it spills over to existing customers missing flights, cargo not making it out, and on and on, says Peter McCallum, VP of Data Center Architecture, FalconStor Software.

In an interesting CNN article (http://money.cnn.com/2016/08/08/technology/delta-airline-computer-failure/index.html?iid=ob_homepage_tech_pool), Thom Patterson notes that the real reason this kind of thing happens is “good old-fashioned screw-ups.” And I couldn’t agree more. My first reaction was: “I happen to know a product that could have prevented this!!” It’s natural to feel that if only they had used my product, my know-how, my awesomeness, this could have been avoided.

And that’s all a boatload of garbage. This happens because of the massive amount of complexity in enterprise systems and infrastructure, and because of an ecosystem of siloed services that has made it almost impossible to test systems and build contingency plans.

In the article, Patterson cites “airline experts” who state that there are three reasons why systems go down: no redundancy, hacking, and human error. I think it’s a little naïve to choose just those three, so I want to add a caveat: every system today has the capability to have redundancy or high availability. The problem comes from not using it, not testing it, or cutting budget and human resources. Let’s just shorten that list to “human error” and call it complete.

So now that we’ve nailed down culpability, what do we do about it? I would argue that some current trends in IT are contributing to these kinds of catastrophic events. Let’s talk finances first: our pursuit of the lowest-cost systems possible (rampant commoditisation) is dragging once-reliable vendors down in quality and interoperability. The “cloud” is offering infrastructure SLAs and hiding culpability for reliability (it’s no longer my fault, it’s my vendor’s fault). And in IT, the desperate adherence to “the way we’ve always done it” stifles innovation in everything from product choice to operational controls.

Let me tell you how I know this: I worked for a rocket motor manufacturer where the disaster plan was: “Run. Don’t look back or your face might catch on fire, too.” I had to test data recoverability every week, system recoverability for critical systems monthly, and run full datacenter failure drills yearly. At least, that’s what my process docs told me. Did I do it? Of course I did!! Before we invested in FalconStor, we would call an actual failure a “drill” and so on. Am I admitting to anything so horrible that my former employer should ask for my paycheck back? Heck no!! Time, resources, money, and impact to production are real reasons why you don’t “play” with systems to imagine a failure. Failures are all too real and close at hand.

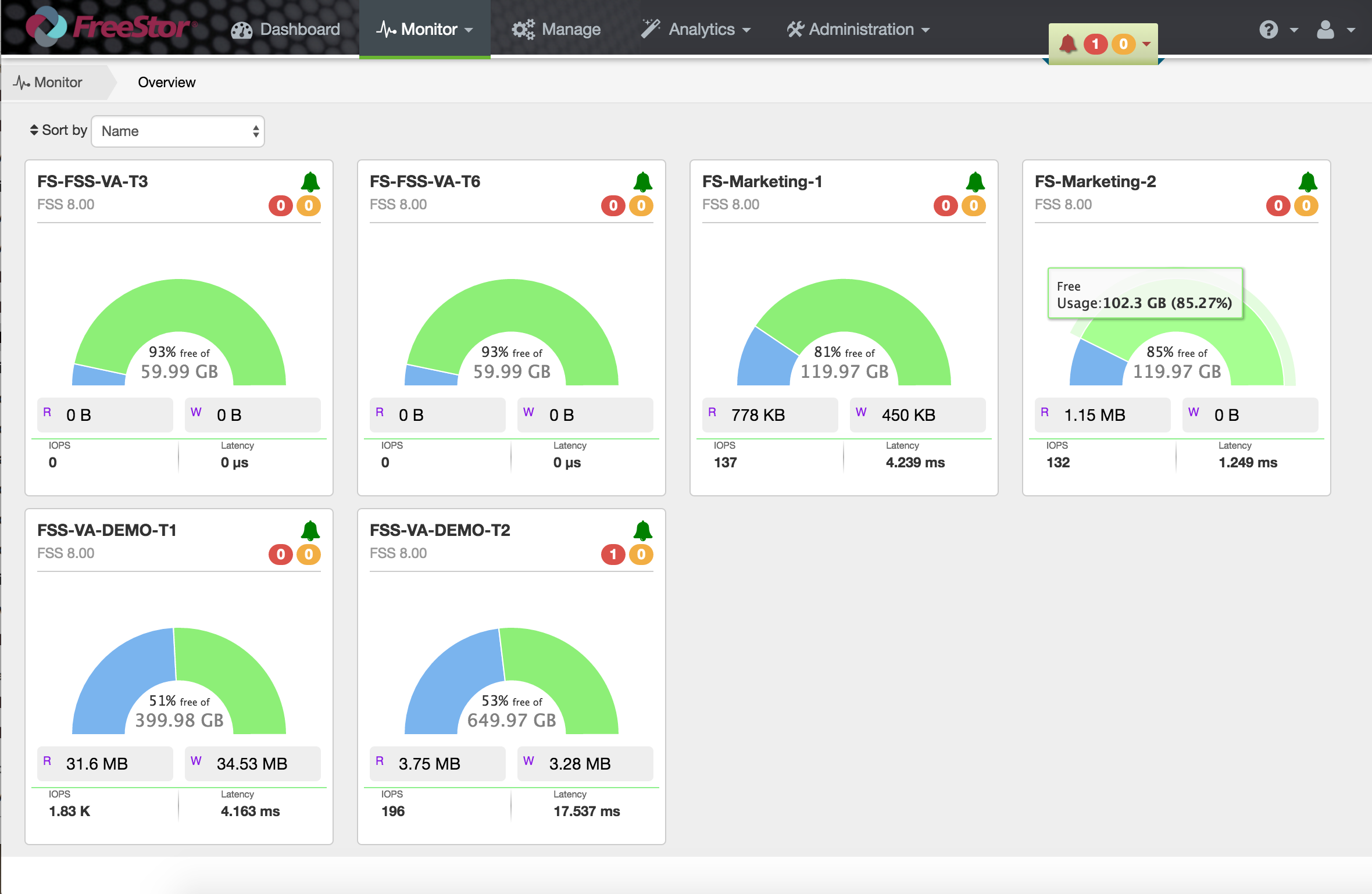

This is honestly why we invested in FreeStor: to handle all the interoperability, disaster recovery execution, and testing. FreeStor allowed me to test, document, and automate disaster recovery scenarios, all without impacting production. Then, as now, FreeStor was the only platform that offered this capability at enterprise scale.

Before we had ITIL and DevOps initiatives, we had CMMI and OSHA regulations, combined with oversight by the Missile Defense Agency and SARBOX. My auditors would give me a week to show them validation of process, and I could show them full datasets within minutes from my desk. I’m sure Delta had similar oversight. So how does a bona fide disaster happen when everyone can state a process, can execute a process, and has all the tools to build near-zero-downtime systems? In my case, it didn’t, because FreeStor was the abstraction layer on top of all my different arrays, running different protocols, and serving my physical and virtual servers across multiple datacenters. I had one validated process to execute every time, rather than a salad bar of tasks across dozens of tools.

In fact, FreeStor gave me a global 15-minute return-to-operations SLA across all of my datacenters, compared with 3 days in most cases for our corporate boys running “enterprise” systems.

The article concludes with this advice for airlines looking to avoid these outages in the future: “They can install more automated checkup systems. They can perform emergency drills by taking their systems offline during slow periods and going to their secondary and backup systems to make sure they are working properly.”

I need to call these “experts” out. There is no “automated checkup system.” That’s a fantasy peculiar to homogeneous systems, the kind that “all-in-one” converged and hyper-converged systems tout. There are too many interconnects and systems outside the purview of these mini wonder-boxes. (Keep in mind that Delta blamed non-redundant power systems for the outage…) This problem was also solved for me by FreeStor’s global analytics, which gave me insight across the board. I’m not saying it was an early warning system, but it was darn good at telling me about my whole infrastructure.

I do like the emergency drills concept, but how would one do this without impacting production? I know how I can do it, but I also know that many of my prospects have tools from their preferred vendors that “almost” do this today but can’t quite test DR (yeah, I’m looking at you, EMC/SRDF/RecoverPoint) on systems mimicking production. One prospect told me they had 4 petabytes of DR redundancy and no idea whether they could actually recover from it if push came to shove.

Note that the “experts” in the article said “during slow periods.” There are no slow periods. Apparently, the Delta disaster occurred at 2:30 in the morning; it doesn’t get much more “slow” than that! Airlines and most modern businesses run 24/7 with almost zero slow-down. Legacy systems are used to having to shut down to test what would happen if a system failed.

So, if Delta Airlines calls me, asking me to share my experience and offer suggestions (I am waiting by my phone…), I would share this with them:

- Review your technology with an open mind and heart: does it do what you think it does? If not, look for a new vendor and a new way to accomplish what you need. The old relationships won’t save you when disaster strikes, because the commission check has already been cashed.

- Look for a platform (not just a tool suite) that commoditises the technology by optimising interoperability and consistency in operations.

- Find a platform that will allow testing of data recovery, system recovery, service recovery, and datacenter recovery all without impacting production. If you can’t test your DR, you can’t provide an SLA that is real.

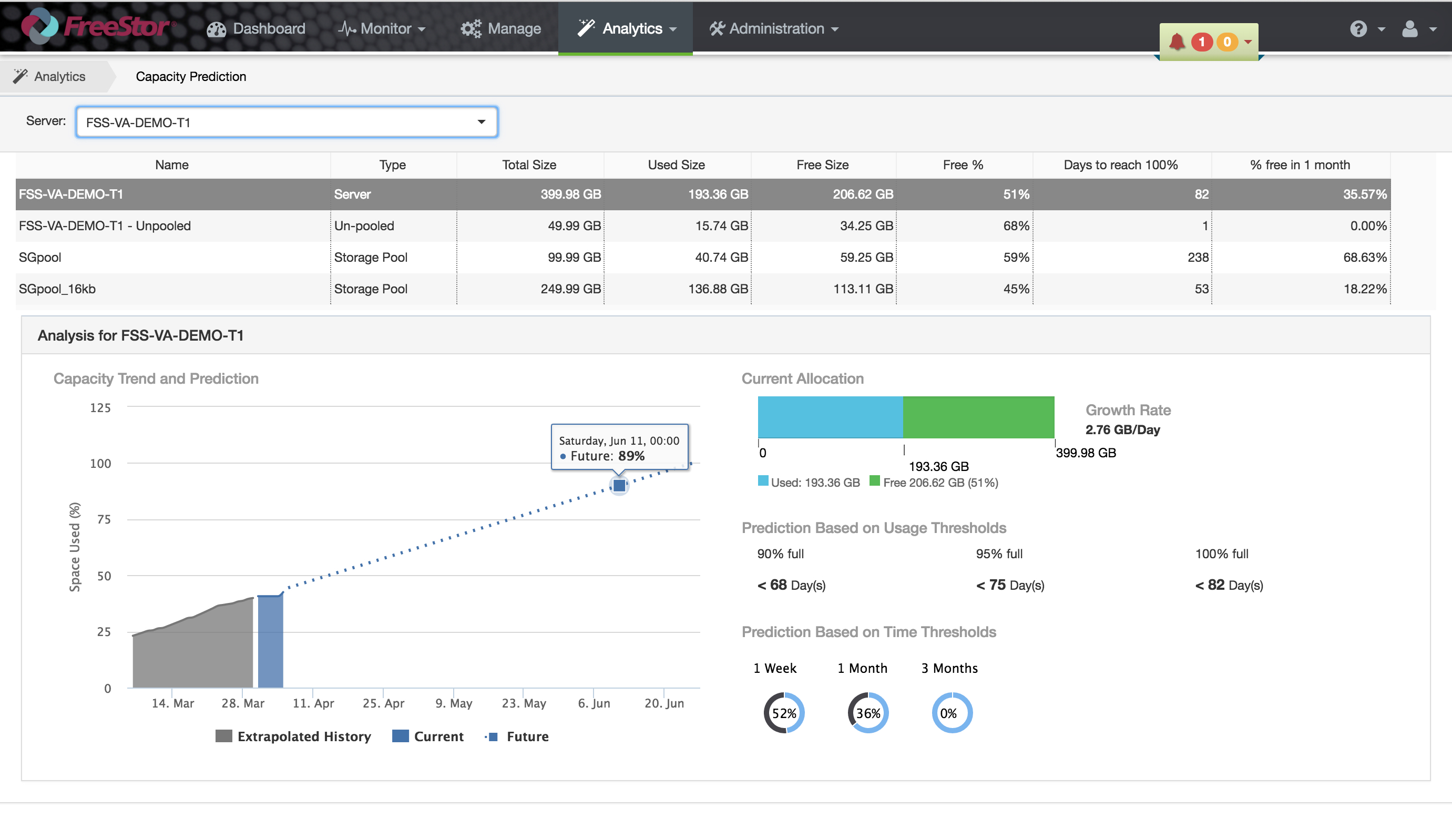

- Use an operational platform that takes historical and real-time data and correlates it through modern analytics to find weak spots and utilisation patterns, so you can build appropriate SLAs and understand the real requirements of your business.

- Find an IT analytics platform that can correlate across ALL infrastructure systems, including cooling, power, and security, to get a holistic view of your environment.

- Don’t offload responsibility to the lowest-cost provider without understanding the underpinnings of their environment as well: if you can’t find a good DR plan for your object store, there is a good chance your cloud vendor can’t either. Just saying.

- Replication and redundancy are NOT high availability. Replication (yes, even synchronous replication) means your data exists in a second place; redundancy means you can fail twice (or more) before the dark settles in. If you need to be always-on, then you need always-on technology.

- Finally, if you have a tool, use it. If the tool doesn’t work, find a new tool. I can’t tell you how many times I have heard from friends and customers “we standardised on this tool, but it doesn’t work. Can you fix it?” Drop the politics and admit when choices weren’t so great. Get the right solution in place to support your business, not your aspirations and relationships. Unless the relationship is with me.
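The replication-versus-availability and “test your DR” points in the list above can be put in rough numbers. The Python sketch below is a back-of-envelope model of my own framing, not anything from FreeStor or the CNN article: it assumes two identical components that fail independently, a hypothetical 99% availability for each, and a hypothetical probability that failover actually works when it is needed. The names `redundant_pair` and `downtime_hours_per_year` are illustrative only.

```python
"""Back-of-envelope availability model (hypothetical numbers, independent failures assumed)."""

MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600; leap years ignored


def downtime_hours_per_year(availability: float) -> float:
    """Convert an availability fraction (e.g. 0.9999) into expected hours of downtime per year."""
    return (1.0 - availability) * MINUTES_PER_YEAR / 60.0


def redundant_pair(single: float, failover_success: float) -> float:
    """Availability of a primary plus one standby of the same availability.

    The service is up when the primary is up, or when the primary is down,
    failover succeeds, and the standby is up. Failures are assumed
    independent, which a shared power feed or a common human error violates.
    """
    primary_down = 1.0 - single
    return single + primary_down * failover_success * single


SINGLE = 0.99  # hypothetical availability of one component
scenarios = [
    ("no standby", 0.0),
    ("replicated, failover untested (works half the time)", 0.5),
    ("replicated, failover tested and proven", 1.0),
]

for label, failover in scenarios:
    availability = redundant_pair(SINGLE, failover)
    print(f"{label}: {availability:.5f} -> "
          f"{downtime_hours_per_year(availability):.1f} hours of downtime/year")
```

With these made-up inputs, the lone component is down about 88 hours a year, a replicated copy whose failover works only half the time still leaves roughly 44 hours, and a failover that always works brings it under an hour. The independence assumption is generous: a shared power system, like the one Delta blamed, takes out both copies at once, and no amount of replication fixes that.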

If nothing else, seeing a true system outage hit a company as massive as Delta should frighten the living daylights out of everyone else. We buy technology as if it were an insurance policy, where we only find out our level of coverage when a disaster happens. Inevitably, someone pretty low on the totem pole will get fired over what happened to Delta’s systems. Some poor schmo hit a button, flipped a switch, blew a circuit. Or, even crazier, someone was testing the system during a “slow” period and found a critical problem in what was an otherwise well-architected system. We might never know for sure.

But the call to action for the rest of us sitting in the airports writing blogs while waiting for our flights is this: rethink everything. Break the mold of buying technology that serves itself. Find and implement technology that serves your business. And most importantly: test that insurance policy regularly so that you know what your exposures are when the worst comes to pass. Because if it happened to Delta, chances are, it will happen to you.

The author of this blog is Peter McCallum, VP of Data Center Architecture, FalconStor Software

Comment on this article below or via Twitter: @IoTNow OR @jcIoTnow